Screen recording is useful for creating tutorials, demos, gaming videos, and more. PowerDirector, developed by CyberLink, is a video editing program that includes built-in screen recording capabilities. This guide walks you through each step of capturing a screen recording in PowerDirector, from configuring the recorder to editing and exporting the result.

Understanding PowerDirector’s Screen Recording Features

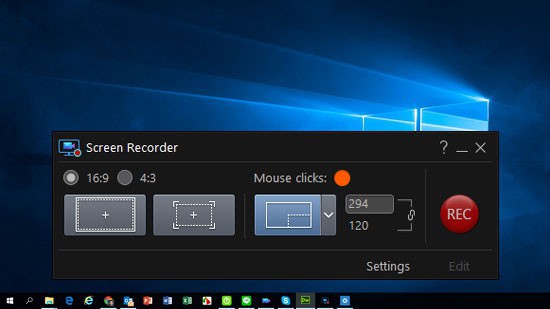

PowerDirector offers an integrated screen recording feature known as the “Screen Recorder.” This tool allows you to capture your computer screen with high-quality video and audio, making it ideal for creating professional-grade content. Here are some key features:

- Full Screen and Custom Region Recording: Record your entire screen or select a specific area.

- Webcam Overlay: Add a webcam feed to your screen recording.

- Audio Recording: Capture system sounds, microphone input, or both.

- Real-Time Drawing Tools: Annotate your screen in real time during recording.

- Scheduled Recording: Set up automatic recordings at specific times.

Getting Started with Screen Recording in PowerDirector

Step 1: Launch PowerDirector

To begin, open PowerDirector on your computer. If you haven’t installed it yet, download and install the latest version from the CyberLink website. Once installed, launch the program.

Step 2: Access the Screen Recorder

From the main interface of PowerDirector, access the screen recorder by following these steps:

- Switch to Capture Mode: Click on the “Capture” button located at the top of the screen.

- Select Screen Recorder: In the capture interface, choose the “Screen Recorder” option.

Step 3: Configure Screen Recording Settings

Before you start recording, you need to configure your settings. Here’s how:

Recording Area

- Full Screen: Select this option to capture your entire screen.

- Custom: Choose this option to define a specific area of your screen to record. Click and drag to select the desired region.
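If you prefer to work out a custom region's size before dragging it, a small calculation can help. The sketch below is not part of PowerDirector. It is a hypothetical helper (`Region` and `fit_region` are names invented for illustration) that assumes a rectangle given in pixels, keeps it on the screen, and rounds its size down to even numbers, since video encoders that use 4:2:0 chroma subsampling generally expect even dimensions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    """A capture rectangle in pixels (hypothetical, for illustration only)."""
    x: int
    y: int
    width: int
    height: int


def fit_region(region: Region, screen_w: int, screen_h: int) -> Region:
    """Keep a region on the screen and round its size down to even numbers."""
    # Move the top-left corner onto the screen, leaving room for 2 pixels.
    x = min(max(region.x, 0), screen_w - 2)
    y = min(max(region.y, 0), screen_h - 2)
    # Limit the size to the space to the right of and below the corner.
    width = min(region.width, screen_w - x)
    height = min(region.height, screen_h - y)
    # Round down to even values, keeping at least 2 pixels per side.
    width = max(2, width - width % 2)
    height = max(2, height - height % 2)
    return Region(x, y, width, height)


print(fit_region(Region(-10, -10, 801, 601), 1920, 1080))
# prints Region(x=0, y=0, width=800, height=600)
```

You would then drag the selection to roughly match the adjusted rectangle.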

Audio Settings

- System Audio: Enable this option to record sounds from your computer.

- Microphone: Enable this option to record audio from your microphone. This is useful for narrations or commentary.

Webcam Overlay

If you want to include a webcam feed in your recording:

- Enable the “Webcam” option.

- Select your webcam device from the drop-down menu.

- Adjust the position and size of the webcam overlay on the screen.

Output Settings

- File Format: Choose the file format for your recording (e.g., MP4, AVI).

- Resolution: Select the desired resolution for your video (e.g., 1080p, 720p).

- Frame Rate: Set the frame rate for your recording (e.g., 30fps, 60fps).
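Resolution and frame rate affect how large your recordings become, mostly through the bitrate the encoder ends up using. As a rough planning aid, the sketch below estimates file size from an average bitrate and a duration. The bitrate figures are illustrative assumptions, not PowerDirector defaults, and `estimate_size_mb` is a hypothetical helper name.

```python
def estimate_size_mb(video_mbps: float, audio_kbps: float, duration_s: float) -> float:
    """Estimate file size in megabytes from average bitrates and duration."""
    # Combine video and audio into a single rate in megabits per second.
    total_mbps = video_mbps + audio_kbps / 1000
    # Megabits per second times seconds gives megabits; 8 bits make a byte.
    return total_mbps * duration_s / 8


# A 10-minute recording at an assumed 8 Mbps video and 192 kbps audio.
size = estimate_size_mb(video_mbps=8, audio_kbps=192, duration_s=10 * 60)
print(f"{size:.1f} MB")
```

Real file sizes vary with the content on screen, so treat the result as an estimate only.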

Step 4: Set Up Real-Time Annotations

PowerDirector’s Screen Recorder includes real-time drawing tools that allow you to annotate your screen during recording. To use these tools:

- Enable the “Drawing Tool” option.

- Choose from various drawing tools like pens, highlighters, and shapes.

- Select the color and size of your drawing tool.

Step 5: Start Recording

Once you’ve configured all your settings, you’re ready to start recording:

- Record Button: Click the “Record” button to begin capturing your screen.

- Countdown: A countdown timer will appear, giving you a few seconds to prepare.

- Recording in Progress: The recording toolbar will appear, indicating that recording is in progress.

Step 6: Pause and Resume Recording

During your recording, you may need to pause and resume:

- Pause: Click the “Pause” button on the recording toolbar to temporarily halt recording.

- Resume: Click the “Resume” button to continue recording.

Step 7: Stop Recording

When you’re done recording, click the “Stop” button on the recording toolbar. Your recording will be saved to the specified location on your computer.

Editing Your Screen Recording in PowerDirector

After capturing your screen recording, you can enhance it using PowerDirector’s powerful editing tools. Here’s how to edit your recording:

Step 1: Import the Recording

- Media Room: Click on the “Media Room” tab in the top left corner.

- Import Media: Click the “Import Media” button and select your screen recording file.

Step 2: Add to Timeline

Drag and drop your screen recording from the Media Room to the timeline. This will allow you to make precise edits and adjustments.

Step 3: Trim and Split

To remove unwanted sections from your recording:

- Trim: Select the recording on the timeline. Drag the edges of the clip to trim the beginning or end.

- Split: Place the playhead at the point where you want to split the clip. Click the “Split” button to divide the clip into two parts.

Step 4: Add Transitions

To make your video flow smoothly, add transitions between clips:

- Transition Room: Click on the “Transition Room” tab.

- Choose Transition: Browse through various transition effects and drag your desired transition to the timeline between clips.

Step 5: Insert Text and Titles

Enhance your recording with text and titles:

- Title Room: Click on the “Title Room” tab.

- Add Title: Drag a title template to the timeline and customize the text, font, color, and animation.

Step 6: Apply Effects and Enhancements

PowerDirector offers a wide range of effects to enhance your recording:

- Effect Room: Click on the “Effect Room” tab.

- Apply Effect: Drag and drop effects onto your clip in the timeline to apply them.

Step 7: Add Background Music

To add background music to your recording:

- Music Room: Click on the “Music Room” tab.

- Import Music: Import your music file and drag it to the audio track in the timeline.

Step 8: Adjust Audio Levels

Ensure your audio levels are balanced:

- Audio Mixing Room: Click on the “Audio Mixing Room” tab.

- Adjust Levels: Use the sliders to adjust the volume of different audio tracks.

Step 9: Preview Your Video

Before finalizing your video, preview it to ensure everything looks and sounds perfect:

- Preview Window: Use the preview window to watch your video.

- Make Adjustments: If necessary, make further adjustments to your clips, effects, or audio.

Step 10: Produce and Export

Once you’re satisfied with your edited video, it’s time to produce and export it:

- Produce: Click on the “Produce” tab.

- Choose Format: Select your desired output format (e.g., MP4, AVI).

- Select Profile: Choose a preset profile based on your preferred resolution and quality.

- Start: Click the “Start” button to begin exporting your video.

Advanced Tips for Screen Recording in PowerDirector

Tip 1: Optimize Performance

To keep screen recordings smooth, close unnecessary applications and background processes before you start. This frees up CPU, memory, and disk resources and reduces the chance of lag or dropped frames during recording.

Tip 2: Use Hotkeys

PowerDirector allows you to use hotkeys for quick access to recording functions. Customize hotkeys for starting, pausing, and stopping recordings for a more efficient workflow.

Tip 3: Plan Your Recording

Before you start recording, plan your content. Create a script or outline to ensure a clear and concise presentation. This will help you stay focused and avoid unnecessary pauses or mistakes.

Tip 4: Test Your Setup

Make a short test recording to check your audio and video settings. Confirm that your microphone is working correctly and that the recording area is properly defined.

Tip 5: Utilize Real-Time Drawing Tools

Make use of the real-time drawing tools to highlight important points during your recording. This is especially useful for tutorials and educational content.

Tip 6: Record in Segments

If your recording is lengthy, consider recording in segments. Shorter segments are easier to manage and edit, and a mistake only costs you one segment rather than the whole take.
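One way to plan segments is to pick a maximum segment length and divide the total running time accordingly. The sketch below is a minimal, hypothetical planning helper (`plan_segments` is not a PowerDirector function) that returns start and end times in minutes.

```python
from typing import List, Tuple


def plan_segments(total_minutes: int, max_segment_minutes: int) -> List[Tuple[int, int]]:
    """Split a total running time into (start, end) segments in minutes."""
    if total_minutes <= 0 or max_segment_minutes <= 0:
        raise ValueError("both durations must be positive")
    segments = []
    start = 0
    while start < total_minutes:
        # The last segment may be shorter than the maximum length.
        end = min(start + max_segment_minutes, total_minutes)
        segments.append((start, end))
        start = end
    return segments


print(plan_segments(25, 10))
# prints [(0, 10), (10, 20), (20, 25)]
```

In practice you would place the breaks at natural pauses in your script rather than at exact time marks.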

Tip 7: Save Regularly

Save your project regularly to avoid losing your work. PowerDirector has an auto-save feature, but it’s good practice to save manually as well, especially before large edits.

Tip 8: Back Up Your Files

Keep backups of your raw recordings and project files. This ensures you have a copy in case of data loss or corruption.
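If you want to automate backups, a short script can copy your files into a timestamped folder. The sketch below uses only the Python standard library. The function name `back_up_files` and the folder naming scheme are assumptions made for illustration, and you would pass in your own file paths.

```python
import shutil
from datetime import datetime
from pathlib import Path


def back_up_files(files, backup_root):
    """Copy files into a new timestamped folder under backup_root."""
    stamp = datetime.now().strftime("%Y%m%d-%H%M%S")
    target = Path(backup_root) / f"backup-{stamp}"
    target.mkdir(parents=True, exist_ok=True)
    copied = []
    for name in files:
        source = Path(name)
        # copy2 also preserves file timestamps where the platform allows it.
        copied.append(Path(shutil.copy2(source, target / source.name)))
    return copied
```

Note that files sharing the same name would overwrite each other inside the backup folder, so keep recording names unique or extend the sketch to handle collisions.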

Troubleshooting Common Issues

Issue 1: Laggy or Choppy Recording

- Solution: Close unnecessary applications, reduce recording resolution, and ensure your computer meets PowerDirector’s system requirements.
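To get a feel for how much a lower resolution or frame rate can help, you can compare the number of pixels captured per second. The sketch below is a rough proxy only: actual load depends on the encoder, your hardware, and what is on screen, and `pixels_per_second` is a hypothetical helper name.

```python
def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Return the raw number of pixels captured each second."""
    return width * height * fps


high = pixels_per_second(1920, 1080, 60)  # 1080p at 60fps
low = pixels_per_second(1280, 720, 30)    # 720p at 30fps
print(high / low)
# prints 4.5
```

Under these assumptions, dropping from 1080p at 60fps to 720p at 30fps cuts the raw pixel rate by a factor of 4.5.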

Issue 2: No Audio Recorded

- Solution: Check your audio settings to ensure the correct input devices are selected. Make sure the microphone and system audio options are enabled in the recorder, and that neither device is muted in your operating system.

Issue 3: Webcam Not Detected

- Solution: Ensure your webcam is properly connected and recognized by your computer. Update your webcam drivers if necessary.

Issue 4: Recording Area Not Defined Correctly

- Solution: Double-check your custom region settings and make sure the correct area is selected for recording.

Issue 5: Exported Video Quality Is Poor

- Solution: Adjust your output settings to a higher resolution and bitrate. Ensure you’re exporting in a suitable format for your intended use.

Conclusion

Capturing screen recordings in PowerDirector is a straightforward process that offers powerful tools for creating high-quality videos. By following this comprehensive guide, you can effectively utilize PowerDirector’s screen recording features, edit your recordings with precision, and produce professional-grade content. Whether you’re creating tutorials, presentations, or gaming videos, PowerDirector provides all the necessary tools to make your screen recording projects a success. Happy recording!