Managing foreign currency transactions in QuickBooks is essential for businesses that operate internationally or deal with clients and suppliers in other countries. The multi-currency feature lets you record transactions in multiple currencies while keeping your books in a single home currency. This guide walks you through setting up and managing foreign currency transactions, from initial setup to reporting and troubleshooting. The step-by-step instructions follow the QuickBooks Online menus; QuickBooks Desktop also supports multiple currencies, but its menu paths differ.

Benefits of Handling Foreign Currency Transactions in QuickBooks

- Accurate Financial Records: Maintain precise financial records by recording transactions in the appropriate currency.

- Simplified Currency Conversion: Automatically convert foreign transactions to your home currency using up-to-date exchange rates.

- Enhanced Reporting: Generate comprehensive reports that reflect your global financial activities.

- Compliance: Keep the currency-level detail needed to meet international accounting standards and regulations.

- Better Cash Flow Management: Monitor and manage cash flow across different currencies.

Setting Up Multi-Currency in QuickBooks Online

Step 1: Enable Multi-Currency Feature

- Log in to QuickBooks Online: Use your credentials to access your QuickBooks Online account.

- Navigate to Account and Settings: Click on the Gear icon (⚙️) at the top right corner and select “Account and Settings.”

- Go to Advanced Settings: In the left-hand menu, select “Advanced.”

- Enable Multi-Currency: Scroll down to the “Currency” section and turn on the Multi-currency feature.

- Confirm Activation: Read the disclaimer and confirm that you want to enable multi-currency. This action cannot be undone.

- Save Changes: Click “Save” to apply the changes.

Step 2: Set Home Currency

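- Home Currency: Confirm that the home currency shown in the “Currency” section of the Advanced Settings is correct. Check this before you confirm activation in Step 1, because the home currency cannot be changed once multi-currency is turned on.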

- Save Settings: Confirm and save any changes.

Adding Foreign Currencies

Step 1: Access Currencies List

- Navigate to Currencies: Click on the Gear icon (⚙️) and select “Currencies” under the “Lists” section.

- Add New Currency: Click the “Add currency” button.

Step 2: Select Currency

- Choose Currency: Select the foreign currency you need from the drop-down menu.

- Save: Click “Add” to save the new currency.

Setting Up Foreign Currency Customers and Vendors

Step 1: Create Foreign Currency Customers

- Add Customer: Navigate to the “Sales” menu, select “Customers,” and click “New customer.”

- Enter Customer Details: Fill in the customer’s information, including name, address, and contact details.

- Select Currency: Choose the foreign currency for this customer from the “Currency” drop-down menu.

- Save: Click “Save” to add the customer.

Step 2: Create Foreign Currency Vendors

- Add Vendor: Navigate to the “Expenses” menu, select “Vendors,” and click “New vendor.”

- Enter Vendor Details: Fill in the vendor’s information, including name, address, and contact details.

- Select Currency: Choose the foreign currency for this vendor from the “Currency” drop-down menu.

- Save: Click “Save” to add the vendor.

Recording Foreign Currency Transactions

Sales and Invoicing

- Create Invoice: Navigate to the “Sales” menu, select “Invoices,” and click “New invoice.”

- Select Customer: Choose the foreign currency customer from the customer list.

- Enter Details: Fill in the invoice details, including items, amounts, and due date.

- Currency Conversion: QuickBooks will automatically convert the invoice amount to your home currency based on the current exchange rate.

- Save and Send: Click “Save and send” to email the invoice to the customer.
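
The conversion in the “Currency Conversion” step comes down to multiplying the foreign amount by an exchange rate and rounding to cents. The following is a minimal sketch of that arithmetic, not QuickBooks' own code. The function name, the rate convention (home units per one foreign unit), and the sample figures are assumptions made for illustration.

```python
from decimal import Decimal, ROUND_HALF_UP


def to_home_currency(foreign_amount: Decimal, rate: Decimal) -> Decimal:
    """Convert a foreign amount to home currency, rounded to cents.

    `rate` is the number of home-currency units per one foreign unit
    (an assumed convention for this sketch).
    """
    return (foreign_amount * rate).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


# Hypothetical invoice: EUR 1,000.00 at an assumed rate of 1.10 USD per EUR.
print(to_home_currency(Decimal("1000.00"), Decimal("1.10")))  # 1100.00
```

Using `Decimal` rather than `float` avoids binary rounding drift, which matters when converted totals have to agree to the cent.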

Expenses and Bills

- Enter Expense: Navigate to the “Expenses” menu, select “Expenses,” and click “New transaction,” then choose “Expense.”

- Select Vendor: Choose the foreign currency vendor from the vendor list.

- Enter Details: Fill in the expense details, including account, amount, and payment method.

- Currency Conversion: QuickBooks will automatically convert the expense amount to your home currency based on the current exchange rate.

- Save: Click “Save” to record the expense.

Managing Exchange Rates

Automatic Exchange Rates

- Default Setting: QuickBooks Online updates exchange rates automatically from current market rates (every four hours, according to Intuit's documentation).

- View Exchange Rates: Click on the Gear icon (⚙️) and select “Currencies” to view the current exchange rate for each foreign currency.

Manual Exchange Rate Adjustments

- Edit Exchange Rate: If necessary, you can manually adjust the exchange rate for a specific transaction. Open the transaction, click on the exchange rate, and enter the new rate.

- Save Changes: Confirm and save the changes to apply the new exchange rate.

Reconciling Foreign Currency Accounts

Step 1: Access Reconciliation

- Navigate to Reconciliation: Go to the “Accounting” menu and select “Reconcile.”

- Select Account: Choose the foreign currency account you want to reconcile.

Step 2: Reconcile Transactions

- Enter Statement Information: Enter the ending balance and statement date from your bank statement.

- Match Transactions: Match the transactions in QuickBooks with those on your bank statement.

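- Adjust for Differences: If a difference remains, trace it to its source (for example, a payment settled at a different exchange rate than the one used on the invoice) and record the adjustment as an exchange gain or loss.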

- Complete Reconciliation: Once all transactions are matched and any differences are accounted for, click “Finish now” to complete the reconciliation.
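
A common source of exchange-rate differences is a payment that settles at a different rate than the one used when the invoice was booked. The sketch below shows the arithmetic for a receivable under assumed figures. The function name and the rate convention (home units per one foreign unit) are hypothetical, and QuickBooks may round or post the difference differently.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")


def realized_fx_gain(foreign_amount: Decimal, booked_rate: Decimal,
                     settled_rate: Decimal) -> Decimal:
    """Home-currency gain (positive) or loss (negative) on a settled receivable."""
    booked = (foreign_amount * booked_rate).quantize(CENT, rounding=ROUND_HALF_UP)
    settled = (foreign_amount * settled_rate).quantize(CENT, rounding=ROUND_HALF_UP)
    return settled - booked


# Hypothetical case: EUR 1,000.00 invoiced at 1.10 and paid when the rate is 1.08.
print(realized_fx_gain(Decimal("1000.00"), Decimal("1.10"), Decimal("1.08")))  # -20.00
```

For a payable the sign flips: paying a bill at a lower rate than the booked one is a gain.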

Generating Reports for Foreign Currency Transactions

QuickBooks offers various reports to help you analyze and manage your foreign currency transactions.

Common Reports

- Profit and Loss by Currency: Shows income and expenses categorized by currency.

- Balance Sheet by Currency: Displays your financial position with assets, liabilities, and equity categorized by currency.

- Unrealized Gains and Losses: Reflects gains or losses due to exchange rate fluctuations on outstanding foreign currency transactions.

- Aging Reports: Shows aging of receivables and payables in foreign currencies.

Customizing Reports

- Filter by Currency: Use filters to view reports for specific currencies.

- Adjust Report Dates: Set custom date ranges to analyze transactions over specific periods.

- Save Custom Reports: Save customized reports for future use and set up scheduled email delivery if needed.
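
If you export transactions and want to cross-check a filtered report outside QuickBooks, the currency and date filters can be reproduced in a few lines. This is an illustrative sketch only. The `Txn` record, its field names, and the sample data are assumptions, not a QuickBooks export format.

```python
from dataclasses import dataclass
from datetime import date
from decimal import Decimal


@dataclass(frozen=True)
class Txn:
    when: date
    currency: str
    amount: Decimal  # amount in the transaction's own currency


def total_for(txns, currency: str, start: date, end: date) -> Decimal:
    """Sum amounts in one currency whose dates fall within [start, end]."""
    return sum(
        (t.amount for t in txns if t.currency == currency and start <= t.when <= end),
        Decimal("0"),
    )


# Hypothetical sample data.
txns = [
    Txn(date(2024, 1, 15), "EUR", Decimal("1000.00")),
    Txn(date(2024, 2, 3), "GBP", Decimal("250.00")),
    Txn(date(2024, 3, 20), "EUR", Decimal("400.50")),
    Txn(date(2024, 4, 2), "EUR", Decimal("75.00")),
]
print(total_for(txns, "EUR", date(2024, 1, 1), date(2024, 3, 31)))  # 1400.50
```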

Advanced Techniques for Handling Foreign Currency

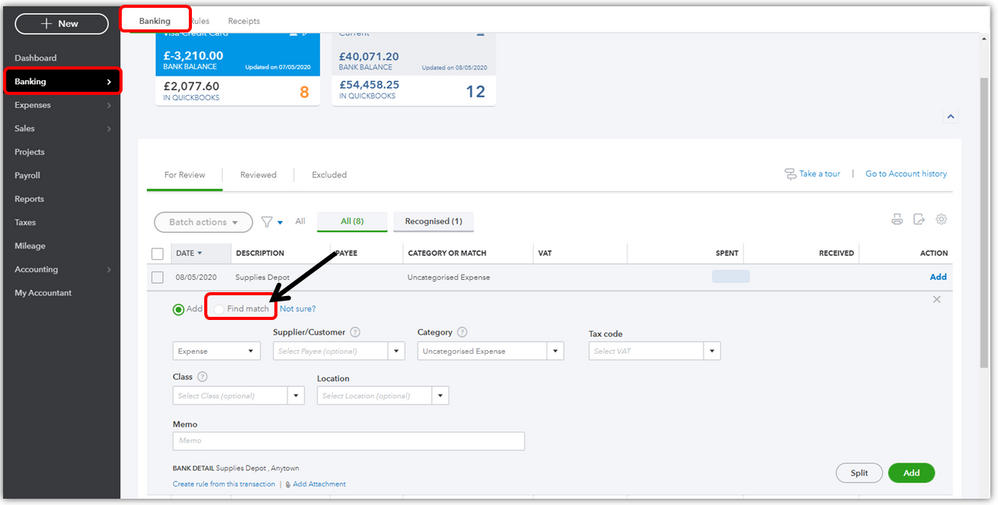

Multi-Currency Banking

- Set Up Foreign Currency Accounts: Create bank accounts in different currencies to manage cash flow more effectively.

- Record Transfers: Use the “Transfer” feature to move funds between accounts in different currencies, ensuring accurate tracking and conversion.

- Monitor Balances: Regularly monitor balances in your foreign currency accounts to manage liquidity.
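
A transfer between accounts in different currencies reduces the source account by the amount sent and increases the target account by the converted amount. The sketch below models that under stated assumptions. The `Account` class, the `transfer` function, the rate convention (target units per one source unit), and the balances are all hypothetical and do not represent a QuickBooks API.

```python
from dataclasses import dataclass
from decimal import Decimal, ROUND_HALF_UP


@dataclass
class Account:
    name: str
    currency: str
    balance: Decimal


def transfer(source: Account, target: Account, amount: Decimal, rate: Decimal) -> Decimal:
    """Move `amount` (in the source currency) into `target`.

    `rate` is target-currency units per one source-currency unit.
    Returns the amount credited to the target account.
    """
    if amount <= 0:
        raise ValueError("transfer amount must be positive")
    if amount > source.balance:
        raise ValueError("insufficient funds in source account")
    received = (amount * rate).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
    source.balance -= amount
    target.balance += received
    return received


# Hypothetical accounts and an assumed rate of 0.92 EUR per USD.
usd = Account("Operating", "USD", Decimal("10000.00"))
eur = Account("EUR Checking", "EUR", Decimal("2500.00"))
print(transfer(usd, eur, Decimal("5000.00"), Decimal("0.92")))  # 4600.00
print(usd.balance, eur.balance)  # 5000.00 7100.00
```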

Handling Unrealized Gains and Losses

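- Record Adjustments: At the end of each reporting period, record unrealized gains and losses on open foreign currency balances. You can do this with a journal entry, or let QuickBooks Online post the home currency adjustment through the “Revalue currency” action in the Currencies list.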

- Review Reports: Regularly review the Unrealized Gains and Losses report to understand the impact of currency fluctuations on your financials.
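
An unrealized gain or loss is the change in home-currency value of an open balance between the rate at which it was booked and the rate at the end of the period. The following sketch works through that calculation for illustration. The `OpenItem` structure, the rate convention (home units per one foreign unit), and the sample figures are assumptions, and the result may differ from the QuickBooks report because of rounding or rate sources.

```python
from decimal import Decimal, ROUND_HALF_UP
from typing import NamedTuple

CENT = Decimal("0.01")


class OpenItem(NamedTuple):
    kind: str                # "receivable" or "payable"
    foreign_amount: Decimal  # open balance in the foreign currency
    booked_rate: Decimal     # home units per foreign unit when recorded


def unrealized_gain(item: OpenItem, closing_rate: Decimal) -> Decimal:
    """Home-currency gain (positive) or loss (negative) at the closing rate."""
    change = (item.foreign_amount * (closing_rate - item.booked_rate)).quantize(
        CENT, rounding=ROUND_HALF_UP
    )
    # A rising rate increases what a receivable is worth and what a payable costs.
    return change if item.kind == "receivable" else -change


# Hypothetical open EUR items, revalued at an assumed closing rate of 1.12.
items = [
    OpenItem("receivable", Decimal("1000.00"), Decimal("1.10")),
    OpenItem("payable", Decimal("500.00"), Decimal("1.10")),
]
total = sum((unrealized_gain(i, Decimal("1.12")) for i in items), Decimal("0"))
print(total)  # 10.00
```

A positive total would be recorded as an exchange gain for the period and a negative total as an exchange loss.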

Troubleshooting Common Issues

Issue 1: Incorrect Exchange Rates

- Verify Exchange Rates: Ensure that the exchange rates in QuickBooks match the current market rates.

- Adjust Manually: Manually adjust exchange rates for specific transactions if discrepancies are found.

Issue 2: Transactions Not Matching Bank Statements

- Check Reconciliation: Ensure that all foreign currency transactions are correctly matched during reconciliation.

- Record Adjustments: Make necessary adjustments for any differences due to exchange rate fluctuations.

Issue 3: Unrealized Gains and Losses Not Recorded

- Set Up Periodic Reviews: Establish a process for periodically reviewing and recording unrealized gains and losses.

- Use Journal Entries: Record journal entries to capture unrealized gains and losses at the end of each reporting period.

Best Practices for Managing Foreign Currency Transactions

1. Regularly Update Exchange Rates

- Automatic Updates: Rely on QuickBooks’ automatic exchange rate updates for accuracy.

- Manual Adjustments: Manually adjust exchange rates for specific transactions if needed.

2. Monitor Cash Flow

- Track Balances: Keep an eye on balances in foreign currency accounts to ensure liquidity.

- Manage Transfers: Regularly transfer funds between accounts to manage cash flow effectively.

3. Regularly Reconcile Accounts

- Monthly Reconciliation: Reconcile foreign currency accounts monthly to ensure accuracy.

- Adjust for Differences: Record adjustments for any discrepancies due to exchange rate fluctuations.

4. Generate and Review Reports

- Regular Reporting: Generate and review reports regularly to monitor the impact of foreign currency transactions on your financials.

- Custom Reports: Customize reports to meet your specific business needs and analyze data effectively.

Conclusion

Handling foreign currency transactions correctly in QuickBooks is essential for businesses that operate internationally or deal with multiple currencies. This guide has covered enabling the multi-currency feature, adding foreign currencies, setting up customers and vendors, recording transactions, managing exchange rates, reconciling accounts, generating reports, and troubleshooting common issues. Following the best practices above helps you keep accurate financial records, meet international accounting standards, and see how exchange rate movements affect your results.